First View on sprites.dev from fly.io

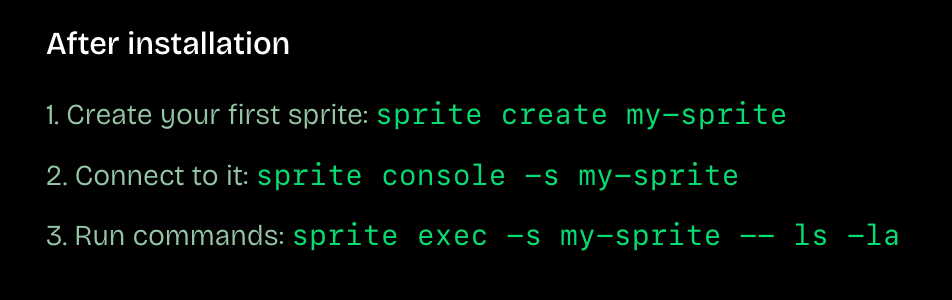

Yesterday I read "Code and Let Live", an article covering fly.io's new(ish) Sprites offering. Sprites (sprites.dev) are persistent VMs that feel like ephemeral ones: they offer ~1s latency to a fresh shell, state snapshotting, and a CLI that makes remote code execution a breeze. I created an account, received an API token, installed the Sprites CLI, and ran the example quick-start commands.

Since AI is hot right now, my first task was to call the OpenAI API from my sprite. To do that, I:

- Installed uv

- Created a new OpenAI API key

- Added this API key to my sprite's environment variables

- Used simonw's llm CLI tool to prompt an OpenAI model

Here are the commands I ran:

```shell
# In a shell on the sprite:
curl -LsSf https://astral.sh/uv/install.sh | sh
export OPENAI_API_KEY="..."
uvx llm "make a python fib function" > fib.py
vi fib.py  # trim the file so it contains only the generated code

# From my local machine, run the script on the sprite:
sprite exec -s my-sprite -- /home/sprite/.local/bin/uv run "/home/sprite/fib.py"
```

Suddenly, I have a remote code execution environment!
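`uv run` executes `fib.py` as an ordinary Python script. The model's reply differs from run to run, so the following is only a hypothetical example of what the trimmed file might look like: an iterative implementation with a `__main__` guard so that running the script remotely prints something visible.

```python
def fib(n: int) -> int:
    """Return the n-th Fibonacci number, with fib(0) == 0 and fib(1) == 1."""
    if n < 0:
        raise ValueError("n must be non-negative")
    a, b = 0, 1
    for _ in range(n):
        # Slide the window forward: (a, b) -> (b, a + b).
        a, b = b, a + b
    return a


if __name__ == "__main__":
    # Print the first ten Fibonacci numbers so `uv run` produces output.
    print([fib(i) for i in range(10)])
```

The iterative version runs in linear time, whereas the naive recursive version that models sometimes produce takes exponential time.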

Future Work

I travel a lot these days and often find myself in low-connectivity environments. Many websites serve very heavy response payloads that load in O(100 ms) on American networks but take many seconds on the connections I've had in Europe. I'd like to build a tool that acts as an intermediary: it would load web content on my behalf and serve me stripped-down versions of it that respect my available bandwidth.
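The heart of such an intermediary is the stripping step. Below is a minimal sketch using only the Python standard library. It assumes the proxy runs somewhere well connected (a sprite, for instance) and that a small whitelist of text tags is enough to keep a page readable. `Stripper`, `strip_html`, `fetch_stripped`, and the tag lists are hypothetical names chosen for illustration, not an existing tool.

```python
import html
import re
from html.parser import HTMLParser
from urllib.request import urlopen

# Hypothetical choices for this sketch: elements dropped together with their contents...
DROP_WITH_CONTENT = {"script", "style", "noscript", "svg", "iframe", "video", "audio", "canvas", "template"}
# ...and the only tags re-emitted (without attributes, except href on <a>).
KEEP_TAGS = {"p", "h1", "h2", "h3", "h4", "h5", "h6", "a", "ul", "ol", "li",
             "pre", "code", "blockquote", "em", "strong", "br"}


class Stripper(HTMLParser):
    """Rebuild a page from a small tag whitelist, discarding everything else."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self.parts: list[str] = []
        self.skip_depth = 0  # > 0 while inside a dropped element such as <script>
        self.pre_depth = 0   # > 0 while inside <pre>, where whitespace is preserved

    def handle_starttag(self, tag, attrs):
        if tag in DROP_WITH_CONTENT:
            self.skip_depth += 1
            return
        if self.skip_depth or tag not in KEEP_TAGS:
            return  # inside dropped content, or a tag we don't keep (img, div, ...)
        if tag == "pre":
            self.pre_depth += 1
        href = dict(attrs).get("href") if tag == "a" else None
        self.parts.append(f'<a href="{html.escape(href)}">' if href else f"<{tag}>")

    def handle_endtag(self, tag):
        if tag in DROP_WITH_CONTENT:
            self.skip_depth = max(0, self.skip_depth - 1)
            return
        if self.skip_depth or tag not in KEEP_TAGS or tag == "br":
            return
        if tag == "pre":
            self.pre_depth = max(0, self.pre_depth - 1)
        self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        if self.skip_depth:
            return
        if not self.pre_depth:
            data = re.sub(r"\s+", " ", data)  # collapse indentation and newlines outside <pre>
        self.parts.append(html.escape(data, quote=False))


def strip_html(page: str) -> str:
    """Return a lightweight version of `page` containing only whitelisted markup."""
    parser = Stripper()
    parser.feed(page)
    parser.close()
    return "".join(parser.parts).strip()


def fetch_stripped(url: str, timeout: float = 10.0) -> str:
    """Fetch `url` from the well-connected host and return the stripped page."""
    with urlopen(url, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return strip_html(resp.read().decode(charset, errors="replace"))
```

For example, `strip_html('<h1>Title</h1><img src="hero.jpg"><script>track()</script><p>Hello <a href="/x" class="btn">world</a></p>')` keeps only `<h1>Title</h1><p>Hello <a href="/x">world</a></p>`. Images, scripts, and stylesheets are probably where most of the bytes go on heavy pages, which is why this sketch drops them outright. Serving the result to my laptop, for example with a small HTTP server on the sprite, would be the next step.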